This HTML version of Think Complexity, 2nd Edition is provided for convenience, but it is not the best format of the book. In particular, some of the symbols are not rendered correctly.

You might prefer to read the PDF version.

Chapter 12 Evolution of cooperation

In this final chapter, I take on two questions, one from biology and one from philosophy:

- In biology, the “problem of altruism” is the apparent conflict between natural selection, which suggests that animals live in a state of constant competition, and altruism, which is the tendency of many animals to help other animals, even to their own detriment. See http://thinkcomplex.com/altruism.

- In moral philosophy, the question of human nature asks whether humans are fundamentally good, or evil, or blank slates shaped by their environment. See http://thinkcomplex.com/nature.

The tools I use to address these questions are agent-based simulation (again) and game theory, which is a set of abstract models meant to describe ways agents interact. Specifically, the game we will consider is the Prisoner’s Dilemma.

The code for this chapter is in chap12.ipynb, which is a Jupyter notebook in the repository for this book. For more information about working with this code, see Section ??.

12.1 Prisoner’s Dilemma

The Prisoner’s Dilemma is a topic in game theory, but it’s not the fun kind of game. Instead, it is the kind of game that sheds light on human motivation and behavior. Here is the presentation of the dilemma from Wikipedia (http://thinkcomplex.com/pd):

Two members of a criminal gang are arrested and imprisoned. Each prisoner is in solitary confinement with no means of communicating with the other. The prosecutors lack sufficient evidence to convict the pair on the principal charge, but they have enough to convict both on a lesser charge. Simultaneously, the prosecutors offer each prisoner a bargain. Each prisoner is given the opportunity to either: (1) betray the other by testifying that the other committed the crime, or (2) cooperate with the other by remaining silent. The offer is:

- If A and B each betray the other, each of them serves 2 years in prison.

- If A betrays B but B remains silent, A will be set free and B will serve 3 years in prison (and vice versa).

- If A and B both remain silent, both of them will only serve 1 year in prison (on the lesser charge).

Obviously, this scenario is contrived, but it is meant to represent a variety of interactions where agents have to choose whether to “cooperate” with each other or “defect”, and where the reward (or punishment) for each agent depends on what the other chooses.

With this set of punishments, it is tempting to say that the players should cooperate, that is, that both should remain silent. But neither agent knows what the other will do, so each has to consider two possible outcomes. First, looking at it from A’s point of view:

- If B remains silent, A is better off defecting; she would go free rather than serve 1 year.

- If B defects, A is still better off defecting; she would serve only 2 years rather than 3.

No matter what B does, A is better off defecting. And because the game is symmetric, this analysis is the same from B’s point of view: no matter what A does, B is better off defecting.
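The dominance argument can be checked mechanically. The following is a minimal Python sketch that encodes the sentences from the offer above (years in prison, so lower is better) and, for each choice B might make, picks the choice that minimizes A’s sentence. The names `sentences` and `best_response_for_a` are illustrative only; they are not part of the book’s code.

```python
# Years in prison for (A's choice, B's choice) -> (A's years, B's years),
# taken from the offer above; lower is better for each prisoner.
sentences = {
    ('silent', 'silent'): (1, 1),
    ('silent', 'betray'): (3, 0),
    ('betray', 'silent'): (0, 3),
    ('betray', 'betray'): (2, 2),
}

def best_response_for_a(b_choice):
    """Return the choice that minimizes A's sentence, given B's choice."""
    return min(['silent', 'betray'],
               key=lambda a_choice: sentences[a_choice, b_choice][0])

for b_choice in ['silent', 'betray']:
    print(f'If B is {b_choice}, A should {best_response_for_a(b_choice)}')
```

Both calls return 'betray', yet mutual betrayal costs each prisoner 2 years where mutual silence would cost only 1, which is the dilemma in a nutshell.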

In the simplest version of this game, we assume that A and B have no other considerations to take into account. They can’t communicate with each other, so they can’t negotiate, make promises, or threaten each other. And they consider only the immediate goal of minimizing their sentences; they don’t take into account any other factors.

Under those assumptions, the rational choice for both agents is to defect. That might be a good thing, at least for purposes of criminal justice. But for the prisoners, it is frustrating because there is, apparently, nothing they can do to achieve the outcome they both want. And this model applies to other scenarios in real life where cooperation would be better for the greater good as well as for the players.

Studying these scenarios, and ways to escape from the dilemma, is the focus of people who study game theory, but it is not the focus of this chapter. We are headed in a different direction.

12.2 The problem of nice

Since the Prisoner’s Dilemma was first discussed in the 1950s, it has been a popular topic of study in social psychology. Based on the analysis in the previous section, we can say what a perfectly rational agent should do; it is harder to predict what real people actually do. Fortunately, the experiment has been done.[1]

If we assume that people are smart enough to do the analysis (or understand it when explained), and that they generally act in their own interest, we would expect them to defect pretty much all the time. But they don’t. In most experiments, subjects cooperate much more than the rational agent model predicts.[2]

The most obvious explanation of this result is that people are not rational agents, which should not be a surprise to anyone. But why not? Is it because they are not smart enough to understand the scenario or because they are knowingly acting contrary to their own interest?

Based on experimental results, it seems that at least part of the explanation is plain altruism: many people are willing to incur a cost to themselves in order to benefit another person. Now, before you nominate that conclusion for publication in the Journal of Obvious Results, let’s keep asking why:

- Why do people help other people, even at a cost to themselves? At least part of the reason is that they want to; it makes them feel good about themselves and the world.

- And why does being nice make people feel good? It might be tempting to say that they were raised right, or more generally trained by society to want to do good things. But there is little doubt that some part of altruism is innate; a proclivity for altruism is the result of normal brain development.

- Well, why is that? The innate parts of brain development, and the personal characteristics that follow, are the result of genetic information. Of course, the relationship between genes and altruism is complicated; there are probably many genes that interact with each other and with environmental factors to cause people to be more or less altruistic in different circumstances. Nevertheless, there are almost certainly genes that tend to make people altruistic.

- Finally, why is that? If, under natural selection, animals are in constant competition with each other to survive and reproduce, it seems obvious that altruism would be counterproductive. In a population where some people help others, even to their own detriment, and others are purely selfish, it seems like the selfish ones would benefit, the altruistic ones would suffer, and the genes for altruism would be driven to extinction.

This apparent contradiction is the “problem of altruism”: why haven’t the genes for altruism died out?

Among biologists, there are many possible explanations, including reciprocal altruism, sexual selection, kin selection, and group selection. Among non-scientists, there are even more explanations. I leave it to you to explore the alternatives; for now I want to focus on just one explanation, arguably the simplest one: maybe altruism is adaptive. In other words, maybe genes for altruism make people more likely to survive and reproduce.

It turns out that the Prisoner’s Dilemma, which raises the problem of altruism, might also help resolve it.

12.3 Prisoner’s dilemma tournaments

In the late 1970s, Robert Axelrod, a political scientist at the University of Michigan, organized a tournament to compare strategies for playing Prisoner’s Dilemma (PD).

He invited participants to submit strategies in the form of computer programs, then played the programs against each other and kept score. Specifically, they played the iterated version of PD, in which the agents play multiple rounds against the same opponent, so their decisions can be based on history.

In Axelrod’s tournaments, a simple strategy that did surprisingly well was called “tit for tat”, or TFT. TFT always cooperates during the first round of an iterated match; after that, it copies whatever the opponent did during the previous round. If the opponent keeps cooperating, TFT keeps cooperating. If the opponent defects at any point, TFT defects in the next round. But if the opponent goes back to cooperating, so does TFT.
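As a rough illustration, TFT needs only the opponent’s previous move. This sketch uses hypothetical function names and plain strings of 'C' and 'D' rather than the agent classes introduced later in the chapter; it shows TFT’s replies to a fixed opponent who defects once, in the third round:

```python
def tit_for_tat(opponent_history):
    """Cooperate in the first round; afterwards copy the opponent's last move."""
    if not opponent_history:
        return 'C'
    return opponent_history[-1]

def tft_moves(opponent_moves):
    """Return TFT's move in each round against a fixed sequence of opponent moves."""
    moves = []
    for i in range(len(opponent_moves)):
        # TFT only sees the opponent's moves from earlier rounds.
        moves.append(tit_for_tat(opponent_moves[:i]))
    return ''.join(moves)

print(tft_moves('CCDCCC'))  # -> CCCDCC
```

TFT’s single defection lands one round after the opponent’s, and it returns to cooperating as soon as the opponent does.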

For more information about these tournaments, and an explanation of why TFT does so well, see this video: http://thinkcomplex.com/pdvid2.

Looking at the strategies that did well in these tournaments, Axelrod identified the characteristics they tended to share:

- Nice: The strategies that do well cooperate during the first round, and generally cooperate as often as they defect in subsequent rounds.

- Retaliating: Strategies that cooperate all the time did not do as well as strategies that retaliate if the opponent defects.

- Forgiving: But strategies that were too vindictive tended to punish themselves as well as their opponents.

- Non-envious: Some of the most successful strategies seldom outscore their opponents; they are successful because they do well enough against a wide variety of opponents.

TFT has all of these properties.

Axelrod’s tournaments offer a partial, possible answer to the problem of altruism: maybe the genes for altruism are prevalent because they are adaptive. To the degree that many social interactions can be modeled as variations on the Prisoner’s Dilemma, a brain that is wired to be nice, tempered by a balance of retaliation and forgiveness, will tend to do well in a wide variety of circumstances.

But the strategies in Axelrod’s tournaments were designed by people; they didn’t evolve. We need to consider whether it is credible that genes for niceness, retribution, and forgiveness could appear by mutation, successfully invade a population of other strategies, and resist being invaded by subsequent mutations.

12.4 Simulating evolution of cooperation

The Evolution of Cooperation is the title of the first book where Axelrod presented results from Prisoner’s Dilemma tournaments and discussed the implications for the problem of altruism. Since then, he and other researchers have explored the evolutionary dynamics of PD tournaments, that is, how the distribution of strategies changes over time in a population of PD contestants. In the rest of this chapter, I run a version of those experiments and present the results.

First, we’ll need a way to encode a PD strategy as a genotype. For this experiment, I consider strategies where the agent’s choice in each round depends only on the opponent’s choice in the previous two rounds. I represent a strategy using a dictionary that maps from the opponent’s previous two choices to the agent’s next choice.

Here is the class definition for these agents:

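```python
import numpy as np

class Agent:
    # Each key is the opponent's previous two choices, oldest first;
    # None means that round has not been played yet.
    keys = [(None, None),
            (None, 'C'),
            (None, 'D'),
            ('C', 'C'),
            ('C', 'D'),
            ('D', 'C'),
            ('D', 'D')]

    def __init__(self, values, fitness=np.nan):
        self.values = values
        self.responses = dict(zip(self.keys, values))
        self.fitness = fitness
```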

keys is the sequence of keys in each agent’s dictionary, where the tuple ('C', 'C') means that the opponent cooperated in the previous two rounds; (None, 'C') means that only one round has been played and the opponent cooperated; and (None, None) means that no rounds have been played.

In the __init__ method, values is a sequence of choices, either 'C' or 'D', that correspond to keys. So if the first element of values is 'C', that means that this agent will cooperate in the first round. If the last element of values is 'D', this agent will defect if the opponent defected in the previous two rounds.

In this implementation, the genotype of an agent who always defects is 'DDDDDDD'; the genotype of an agent who always cooperates is 'CCCCCCC', and the genotype for TFT is 'CCDCDCD'.
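One way to check the TFT genotype is to rebuild the dictionary the same way Agent.__init__ does, with dict(zip(keys, values)), and confirm that each response matches the opponent’s most recent move. The helper `responses` below is a hypothetical stand-in for the Agent constructor; it assumes, as the keys suggest, that the second element of each tuple is the more recent round.

```python
# Same keys as the Agent class: the opponent's previous two choices,
# with None standing for a round that has not been played yet.
keys = [(None, None), (None, 'C'), (None, 'D'),
        ('C', 'C'), ('C', 'D'), ('D', 'C'), ('D', 'D')]

def responses(genotype):
    """Hypothetical helper: map each key to a response, like Agent.__init__."""
    return dict(zip(keys, genotype))

tft = responses('CCDCDCD')

# TFT cooperates in the first round and otherwise copies the opponent's
# most recent choice, which is the last element of each key.
for key, response in tft.items():
    expected = 'C' if key[-1] is None else key[-1]
    assert response == expected, (key, response)
print('CCDCDCD behaves like TFT')
```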

The Agent class provides copy, which makes another agent with the same genotype, but with some probability of mutation:

```python
# class Agent
def copy(self, prob_mutate=0.05):
    # With probability prob_mutate, the copy gets a mutated genotype.
    if np.random.random() > prob_mutate:
        values = self.values
    else:
        values = self.mutate()
    return Agent(values, self.fitness)
```

Mutation works by choosing a random value in the genotype and flipping from 'C' to 'D', or vice versa:

```python
# class Agent
def mutate(self):
    values = list(self.values)
    # Pick one position at random and flip it between 'C' and 'D'.
    index = np.random.choice(len(values))
    values[index] = 'C' if values[index] == 'D' else 'D'
    return values
```
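The mutation step can be exercised on its own. This sketch restates mutate as a standalone function that takes a NumPy `Generator` (an assumption made here so the example is reproducible; the book’s method uses the global `np.random` functions) and checks that exactly one element of the genotype flips:

```python
import numpy as np

def mutate(values, rng):
    """Return a copy of values with one randomly chosen element flipped."""
    values = list(values)
    index = rng.choice(len(values))
    values[index] = 'C' if values[index] == 'D' else 'D'
    return values

rng = np.random.default_rng(17)   # seeded only to make the example repeatable
parent = list('CCDCDCD')          # TFT genotype
child = mutate(parent, rng)

# Count positions where the child differs from the parent.
differences = sum(p != c for p, c in zip(parent, child))
print(''.join(parent), '->', ''.join(child), f'({differences} change)')
```

Because mutate converts its input to a new list before flipping, the parent genotype is left unchanged.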

Now that we have agents, we need a tournament.

12.5 The Tournament

The Tournament class encapsulates the details of the PD competition:

```python
class Tournament:
    # Maps (choice1, choice2) to (payoff1, payoff2)
    payoffs = {('C', 'C'): (3, 3),
               ('C', 'D'): (0, 5),
               ('D', 'C'): (5, 0),
               ('D', 'D'): (1, 1)}

    num_rounds = 6

    def play(self, agent1, agent2):
        agent1.reset()
        agent2.reset()

        for i in range(self.num_rounds):
            resp1 = agent1.respond(agent2)
            resp2 = agent2.respond(agent1)

            pay1, pay2 = self.payoffs[resp1, resp2]

            agent1.append(resp1, pay1)
            agent2.append(resp2, pay2)

        return agent1.score, agent2.score
```

payoffs is a dictionary that maps from the agents’ choices to their rewards. For example, if both agents cooperate, they each get 3 points. If one defects and the other cooperates, the defector gets 5 and the cooperator gets 0. If they both defect, each gets 1. These are the payoffs Axelrod used in his tournaments.
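These payoffs satisfy the usual conditions for a Prisoner’s Dilemma. Using the conventional game-theory labels T (temptation), R (reward), P (punishment), and S (sucker’s payoff), which are not names from the book’s code, a single-round dilemma needs T > R > P > S, and the iterated game usually also requires 2R > T + S, so that two players cannot beat steady cooperation by taking turns exploiting each other. A short check, assuming the payoffs dictionary from the Tournament class:

```python
payoffs = {('C', 'C'): (3, 3), ('C', 'D'): (0, 5),
           ('D', 'C'): (5, 0), ('D', 'D'): (1, 1)}

# Conventional names for the first player's payoffs.
R = payoffs['C', 'C'][0]   # reward for mutual cooperation
S = payoffs['C', 'D'][0]   # sucker's payoff: cooperate against a defector
T = payoffs['D', 'C'][0]   # temptation: defect against a cooperator
P = payoffs['D', 'D'][0]   # punishment for mutual defection

# T > R > P > S makes defection dominant in a single round;
# 2R > T + S means alternating exploitation does not beat steady cooperation.
is_prisoners_dilemma = T > R > P > S and 2 * R > T + S
print(T, R, P, S, is_prisoners_dilemma)  # -> 5 3 1 0 True
```

Unlike the prison sentences in the Wikipedia version, these are rewards, so higher is better.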

The play method runs several rounds of the PD game. It uses the following methods from the Agent class:

- reset: Initializes the agents before the first round, resetting their scores and the history of their responses.

- respond: Asks each agent for their response, given the opponent’s previous responses.

- append: Updates each agent by storing the choices and adding up the scores from successive rounds.

After the given number of rounds, play returns the total score for each agent. I chose num_rounds=6 so that each element of the genotype is accessed with roughly the same frequency. The first element is accessed only during the first round, or one sixth of the time. The next two elements are accessed only during the second round, or one twelfth each. The last four elements are accessed in the remaining four of six rounds, or one sixth each, on average.
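The arithmetic behind these frequencies can be written as a small function of num_rounds. This is a sketch under the same averaging assumption the text makes: in round 2 the lookup goes to element 1 or 2, counted as half a lookup each, and from round 3 onward the four remaining elements are assumed to be used equally often. The function name `access_frequencies` is hypothetical, and it assumes num_rounds is at least 2.

```python
from fractions import Fraction

def access_frequencies(num_rounds):
    """Expected fraction of lookups that hit each of the 7 genotype elements."""
    total = Fraction(num_rounds)
    first = Fraction(1) / total                   # round 1 always uses element 0
    second = Fraction(1, 2) / total               # round 2: element 1 or 2, half each
    rest = Fraction(num_rounds - 2, 4) / total    # later rounds: elements 3-6 share equally
    return [first, second, second, rest, rest, rest, rest]

print([str(f) for f in access_frequencies(6)])
```

With num_rounds=20, for example, each of the last four elements receives 9/40 of the lookups while the first receives only 1/20, so longer matches put most of the selective pressure on the last four elements.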

Tournament provides a second method, melee, that determines which agents compete against each other:

```python
# class Tournament
def melee(self, agents, randomize=True):
    if randomize:
        agents = np.random.permutation(agents)

    n = len(agents)
    i_row = np.arange(n)
    j_row = (i_row + 1) % n

    totals = np.zeros(n)

    for i, j in zip(i_row, j_row):
        agent1, agent2 = agents[i], agents[j]
        score1, score2 = self.play(agent1, agent2)
        totals[i] += score1
        totals[j] += score2

    for i in i_row:
        agents[i].fitness = totals[i] / self.num_rounds / 2
```

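melee takes a list of agents and a boolean, randomize, that determines whether each agent plays against the same neighbors every time, or whether the pairings are randomized.

i_row and j_row contain the indices of the pairings. Because j_row is i_row shifted by one and wrapped around with % n, each agent plays exactly two matches: once as agent1 against the next agent, and once as agent2 against the previous one. totals contains the total score of each agent.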

Inside the loop, we select two agents, invoke play, and update totals. At the end, we compute the average number of points each agent got, per round and per opponent, and store the results in the fitness attribute of each agent.
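The division by 2 relies on how the pairings are built. In this sketch with five agents (a hypothetical size), pairing index i with (i + 1) % n produces a ring, so every index shows up exactly twice across i_row and j_row:

```python
import numpy as np

n = 5
i_row = np.arange(n)
j_row = (i_row + 1) % n          # pair each agent with the next, wrapping around
pairs = list(zip(i_row.tolist(), j_row.tolist()))
print(pairs)  # -> [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]

# Every index appears exactly twice: once as agent1 and once as agent2.
matches_per_agent = np.bincount(np.concatenate([i_row, j_row]), minlength=n)
print(matches_per_agent)  # -> [2 2 2 2 2]
```

With only two agents the ring still gives each agent two matches, but both are against the same opponent.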

12.6 The Simulation

The Simulation class for this chapter is based on the one in Section ??; the only differences are in __init__ and step.

Here’s the __init__ method:

```python
class PDSimulation(Simulation):

    def __init__(self, tournament, agents):
        self.tournament = tournament
        self.agents = np.asarray(agents)
        self.instruments = []
```

A Simulation object contains a Tournament object, a sequence of agents, and a sequence of Instrument objects (as in Section ??).

Here’s the step method:

```python
# class PDSimulation
def step(self):
    self.tournament.melee(self.agents)
    Simulation.step(self)
```

This version of step uses Tournament.melee, which sets the fitness attribute for each agent; then it calls the step method from the Simulation class, reproduced here:

```python
# class Simulation
def step(self):
    n = len(self.agents)
    fits = self.get_fitnesses()

    # see who dies
    index_dead = self.choose_dead(fits)
    num_dead = len(index_dead)

    # replace the dead with copies of the living
    replacements = self.choose_replacements(num_dead, fits)
    self.agents[index_dead] = replacements

    # update any instruments
    self.update_instruments()
```

Simulation.step collects the agents’ fitnesses in an array; then it calls choose_dead to decide which agents die, and choose_replacements to decide which agents reproduce.

My simulation includes differential survival, as in Section ??, but not differential reproduction. You can see the details in the notebook for this chapter. As one of the exercises, you will have a chance to explore the effect of differential reproduction.

12.7 Results

Suppose we start with a population of three agents: one always cooperates, one always defects, and one plays the TFT strategy. If we run Tournament.melee with this population, the cooperator gets 1.5 points per round, the TFT agent gets 1.9, and the defector gets 3.33. This result suggests that “always defect” should quickly become the dominant strategy.
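These numbers can be reproduced by hand, and the sketch below does the bookkeeping. It is a compact re-implementation, not the book’s Agent and Tournament classes: genomes are plain strings, each agent’s history is a list padded with two Nones so the last two entries always form a valid key, and shuffling is skipped because with three agents in a ring every pair meets exactly once either way (and the payoffs are symmetric). The names play, melee, KEYS, PAYOFFS, and NUM_ROUNDS are local to this example.

```python
import numpy as np

KEYS = [(None, None), (None, 'C'), (None, 'D'),
        ('C', 'C'), ('C', 'D'), ('D', 'C'), ('D', 'D')]
PAYOFFS = {('C', 'C'): (3, 3), ('C', 'D'): (0, 5),
           ('D', 'C'): (5, 0), ('D', 'D'): (1, 1)}
NUM_ROUNDS = 6

def play(genome1, genome2):
    """Play one match; each agent responds to the opponent's last two moves."""
    resp1 = dict(zip(KEYS, genome1))
    resp2 = dict(zip(KEYS, genome2))
    hist1, hist2 = [None, None], [None, None]   # padded move histories
    score1 = score2 = 0
    for _ in range(NUM_ROUNDS):
        move1 = resp1[tuple(hist2[-2:])]
        move2 = resp2[tuple(hist1[-2:])]
        pay1, pay2 = PAYOFFS[move1, move2]
        score1 += pay1
        score2 += pay2
        hist1.append(move1)
        hist2.append(move2)
    return score1, score2

def melee(genomes):
    """Ring pairing without shuffling; fitness = points per round per opponent."""
    n = len(genomes)
    totals = np.zeros(n)
    for i in range(n):
        j = (i + 1) % n
        s1, s2 = play(genomes[i], genomes[j])
        totals[i] += s1
        totals[j] += s2
    return totals / NUM_ROUNDS / 2

fitness = melee(['CCCCCCC', 'CCDCDCD', 'DDDDDDD'])
print(np.round(fitness, 2))  # cooperator, TFT, defector: about 1.5, 1.92, 3.33
```

The middle number comes from TFT earning 18 points against the cooperator and only 5 against the defector (0 in the first round, then 1 per round for five rounds of mutual defection), and 23 / 12 is about 1.92.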

But “always defect” contains the seeds of its own destruction. If nicer strategies are driven to extinction, the defectors have no one to take advantage of. Their fitness drops, and they become vulnerable to invasion by cooperators.

Based on this analysis, it is not easy to predict how the system will behave: will it find a stable equilibrium, or oscillate between various points in the genotype landscape? Let’s run the simulation and find out!

I start with 100 identical agents who always defect, and run the simulation for 5000 steps:

```python
tour = Tournament()
agents = make_identical_agents(100, list('DDDDDDD'))
sim = PDSimulation(tour, agents)
```

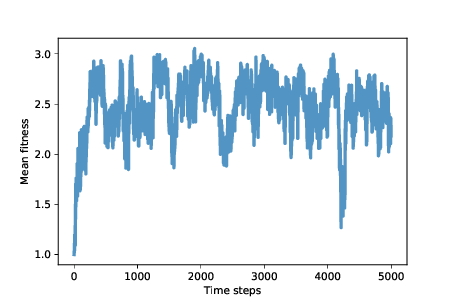

Figure 12.1: Average fitness (points scored per round of Prisoner’s Dilemma).

Figure 12.1 shows mean fitness over time (using the MeanFitness instrument from Section ??). Initially mean fitness is 1, because when defectors face each other, they get only 1 point each per round.

After about 500 time steps, mean fitness increases to nearly 3, which is what cooperators get when they face each other. However, as we suspected, this situation is unstable. Over the next 500 steps, mean fitness drops below 2, climbs back toward 3, and continues to oscillate.

The rest of the simulation is highly variable, but with the exception of one big drop, mean fitness is usually between 2 and 3, with the long-term mean close to 2.5.

And that’s not bad! It’s not quite a utopia of cooperation, which would average 3 points per round, but it’s a long way from the dystopia of perpetual defection. And it’s a lot better than what we might expect from the natural selection of self-interested agents.

To get some insight into this level of fitness, let’s look at a few more instruments. Niceness measures the fraction of cooperation in the genotypes of the agents after each time step:

```python
class Niceness(Instrument):

    def update(self, sim):
        responses = np.array([agent.values for agent in sim.agents])
        metric = np.mean(responses == 'C')
        self.metrics.append(metric)
```

responses is an array with one row for each agent and one column for each element of the genome. metric is the fraction of elements that are 'C', averaged across agents.
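To see what the metric computes, here is a sketch on three hypothetical genotypes (always defect, TFT, and always cooperate). It assumes, as in the chapter, that each genotype is a list of 'C' and 'D' characters of the same length, so np.array produces a two-dimensional array of strings:

```python
import numpy as np

# Three hypothetical genotypes: always defect, TFT, always cooperate.
genotypes = [list('DDDDDDD'), list('CCDCDCD'), list('CCCCCCC')]

responses = np.array(genotypes)       # shape (3, 7): one row per agent
metric = np.mean(responses == 'C')    # fraction of 'C' over all 21 elements
print(responses.shape, round(metric, 3))
```

TFT contributes 4 of its 7 elements, the cooperator all 7, and the defector none, so the metric is 11/21, or about 0.52.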

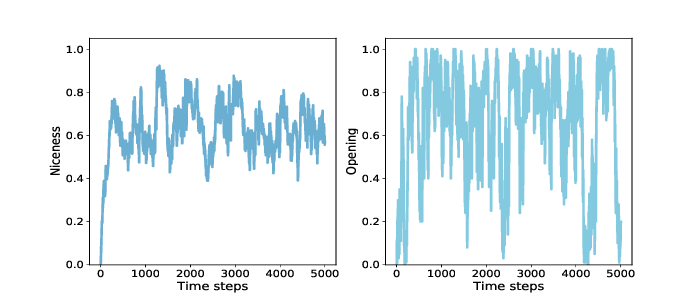

Figure 12.2: Average niceness across all genomes in the population (left), and fraction of population that cooperates in the first round (right).

Figure 12.2 (left) shows the results: starting from 0, average niceness increases quickly to 0.75, then oscillates between 0.4 and 0.85, with a long-term mean near 0.65. Again, that’s a lot of niceness!

Looking specifically at the opening move, we can track the fraction of agents that cooperate in the first round. Here’s the instrument:

```python
class Opening(Instrument):

    def update(self, sim):
        responses = np.array([agent.values[0] for agent in sim.agents])
        metric = np.mean(responses == 'C')
        self.metrics.append(metric)
```

Figure 12.2 (right) shows the results, which are highly variable. The fraction of agents who cooperate in the first round is often near 1, and occasionally near 0. The long-term average is close to 0.65, similar to overall niceness. These results are consistent with Axelrod’s tournaments; in general, nice strategies do well.

The other characteristics Axelrod identifies in successful strategies are retaliation and forgiveness. To measure retaliation, I define this instrument:

```python
class Retaliating(Instrument):

    def update(self, sim):
        after_d = np.array([agent.values[2::2] for agent in sim.agents])
        after_c = np.array([agent.values[1::2] for agent in sim.agents])
        metric = np.mean(after_d=='D') - np.mean(after_c=='D')
        self.metrics.append(metric)
```

Retaliating compares the fraction of genome elements, across all agents, where an agent defects after the opponent defects (the elements at indices 2, 4, and 6) with the fraction where an agent defects after the opponent cooperates (indices 1, 3, and 5). As you might expect by now, the results vary substantially (you can see the graph in the notebook). On average the difference between these fractions is less than 0.1, so if agents defect 30% of the time after the opponent cooperates, they might defect 40% of the time after a defection.
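The slices in Retaliating work because of the order of the keys: every even index from 2 on is a key whose most recent move is 'D', and every odd index is a key whose most recent move is 'C'. This sketch prints both slices and computes the same difference for single hypothetical genotypes; the function `retaliation` is an illustrative stand-in for the instrument’s update method.

```python
import numpy as np

keys = [(None, None), (None, 'C'), (None, 'D'),
        ('C', 'C'), ('C', 'D'), ('D', 'C'), ('D', 'D')]

# The slices used by Retaliating pick out keys by the opponent's latest move.
print(keys[2::2])  # -> [(None, 'D'), ('C', 'D'), ('D', 'D')]
print(keys[1::2])  # -> [(None, 'C'), ('C', 'C'), ('D', 'C')]

def retaliation(genotypes):
    """Fraction defecting after a defection minus fraction defecting after cooperation."""
    values = np.array([list(g) for g in genotypes])
    after_d = values[:, 2::2]
    after_c = values[:, 1::2]
    return np.mean(after_d == 'D') - np.mean(after_c == 'D')

print(retaliation(['CCDCDCD']))  # TFT -> 1.0
print(retaliation(['DDDDDDD']))  # always defect -> 0.0
```

Always defect scores 0 because it defects regardless of what the opponent did, so the measure rewards responsiveness rather than defection as such.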

This result provides weak support for the claim that successful strategies retaliate. But maybe it’s not necessary for all agents, or even many, to be retaliatory; if there is at least some tendency toward retaliation in the population as a whole, that might be enough to prevent high-defection strategies from gaining ground.

To measure forgiveness, I define one more instrument to see whether agents might be more likely to cooperate after D-C in the previous two rounds, compared to C-D. In my simulations, there is no evidence for this particular kind of forgiveness. On the other hand, the strategies in these simulations are necessarily forgiving because they consider only the previous two rounds of history. In this context, forgetting is a kind of forgiving.
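The forgiveness instrument itself is in the notebook and is not reproduced here. A minimal sketch of the comparison described above might look like the following; the function name, and the choice to measure the difference in cooperation rates, are assumptions modeled on the Retaliating instrument. Indices 5 and 4 correspond to the keys ('D', 'C') and ('C', 'D').

```python
import numpy as np

# Zero-based genotype positions, following the order of Agent.keys.
INDEX_CD = 4   # key ('C', 'D'): opponent cooperated, then defected
INDEX_DC = 5   # key ('D', 'C'): opponent defected, then cooperated

def forgiveness(genotypes):
    """Cooperation rate after D-C minus cooperation rate after C-D."""
    values = np.array([list(g) for g in genotypes])
    after_dc = np.mean(values[:, INDEX_DC] == 'C')
    after_cd = np.mean(values[:, INDEX_CD] == 'C')
    return after_dc - after_cd

print(forgiveness(['CCCCCCC']))             # always cooperate: 0.0
print(forgiveness(['CCDCDCD', 'CCCCCCC']))  # TFT plus a cooperator: 0.5
```

Note that TFT alone scores 1.0 on this measure simply because it copies the opponent’s most recent move.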

12.8 Conclusions

Axelrod’s tournaments suggest a possible resolution to the problem of altruism: maybe being nice, but not too nice, is adaptive. But the strategies in the original tournaments were designed by people, not evolution, and the distribution of strategies did not change over the course of the tournaments.

So that raises a question: strategies like TFT might do well in a fixed population of human-designed strategies, but can they evolve? In other words, can they appear in a population through mutation, compete successfully with their ancestors, and resist invasion by their descendants?

The simulations in this chapter suggest:

- Populations of defectors are vulnerable to invasion by nicer strategies.

- Populations that are too nice are vulnerable to invasion by defectors.

- As a result, the average level of niceness oscillates but is generally high, and the average level of fitness is generally closer to a utopia of cooperation than to a dystopia of defection.

- TFT, which was a successful strategy in Axelrod’s tournaments, does not seem to be optimal in an evolving population. In fact, there is probably no stable optimal strategy.

- Some degree of retaliation may be adaptive, but it might not be necessary for all agents to retaliate. If there is enough retaliation in the population as a whole, that might be enough to prevent invasion by defectors.[3]

Obviously, the agents in these simulations are simple, and the Prisoner’s Dilemma is a highly abstract model of a limited range of social interactions. Nevertheless, the results in this chapter provide some insight into human nature. Maybe our inclinations toward cooperation, retaliation, and forgiveness are innate, at least in part. If so, these characteristics are a result of how our brains are wired, which is controlled, at least in part, by our genes. And maybe our genes build our brains that way because over the history of human evolution, genes for less altruistic brains were less likely to propagate.

Maybe that’s why selfish genes build altruistic brains.

12.9 Exercises

The code for this chapter is in the Jupyter notebook chap12.ipynb in the repository for this book. Open the notebook, read the code, and run the cells. You can use this notebook to work on the following exercises. My solutions are in chap12soln.ipynb.

The simulations in this chapter depend on conditions and parameters I chose arbitrarily. As an exercise, I encourage you to explore other conditions to see what effect they have on the results. Here are some suggestions:

- Vary the initial conditions: instead of starting with all defectors, see what happens if you start with all cooperators, all TFT, or random agents.

- In Tournament.melee, I shuffle the agents at the beginning of each time step, so each agent plays against two randomly chosen agents. What happens if you don’t shuffle? In that case, each agent plays against the same neighbors repeatedly. That might make it easier for a minority strategy to invade a majority, by taking advantage of locality.

- Since each agent only plays against two other agents, the outcome of each round is highly variable: an agent that would do well against most other agents might get unlucky during any given round, or the other way around. What happens if you increase the number of opponents each agent plays against during each round? Or what if an agent’s fitness at the end of each step is the average of its current score and its fitness at the end of the previous round?

- The function I chose for prob_survival varies from 0.7 to 0.9, so the least fit agent, with p=0.7, lives for 3.33 time steps on average, and the most fit agent lives for 10 time steps. What happens if you make the degree of differential survival more or less “aggressive”?

- I chose num_rounds=6 so that each element of the genome has roughly the same impact on the outcome of a match. But that is substantially shorter than what Axelrod used in his tournaments. What happens if you increase num_rounds? Note: if you explore the effect of this parameter, you might want to modify Niceness to measure the niceness of the last 4 elements of the genome, which will be under more selective pressure as num_rounds increases.

- My implementation has differential survival but not differential reproduction. What happens if you add differential reproduction?
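The lifetimes quoted in the exercise on differential survival follow from a geometric distribution: if an agent survives each time step with probability p, it dies at each step with probability 1 - p, so its expected lifetime is 1/(1 - p), which gives 3.33 steps for p = 0.7 and 10 for p = 0.9. The sketch below pairs that formula with a hypothetical prob_survival that maps fitness linearly onto [0.7, 0.9]; the book’s actual function is in the notebook and may have a different shape.

```python
import numpy as np

def prob_survival(fits, low=0.7, high=0.9):
    """Hypothetical linear map from fitness to survival probability in [low, high]."""
    fits = np.asarray(fits, dtype=float)
    span = fits.max() - fits.min()
    if span == 0:
        # Everyone is equally fit, so everyone gets the midpoint.
        return np.full(len(fits), (low + high) / 2)
    return low + (high - low) * (fits - fits.min()) / span

def expected_lifetime(p):
    """Mean number of time steps lived if each step is survived with probability p."""
    return 1 / (1 - p)

p = prob_survival([1.0, 2.5, 3.0])
print(p)   # least fit gets 0.7, most fit gets 0.9
print(expected_lifetime(0.7), expected_lifetime(0.9))
```

Widening the range, for example with low=0.5 and high=0.95, is one way to make differential survival more aggressive in this sketch.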

In my simulations, the population never converges to a state where a majority share the same, presumably optimal, genotype. There are two possible explanations for this outcome: one is that there is no optimal strategy, because whenever the population is dominated by a majority genotype, that condition creates an opportunity for a minority to invade; the other possibility is that the mutation rate is high enough to maintain a diversity of genotypes.

To distinguish between these explanations, try lowering the mutation rate to see what happens. Alternatively, start with a random population and run without mutation until only one genotype survives. Or run with mutation until the system reaches something like a steady state; then turn off mutation and run until there is only one surviving genotype. What are the characteristics of the genotypes that prevail in these conditions?

The agents in my experiment are “reactive” in the sense that their choice during each round depends only on what the opponent did during previous rounds. Explore strategies that also take into account the agent’s past choices. These strategies can distinguish an opponent who retaliates from an opponent who defects without provocation.

[1] Here’s a recent report with references to previous experiments: Barreda-Tarrazona, Jaramillo-Gutiérrez, Pavan, and Sabater-Grande, “Individual Characteristics vs. Experience: An Experimental Study on Cooperation in Prisoner’s Dilemma”, Frontiers in Psychology, 2017; 8: 596. http://thinkcomplex.com/pdexp.

[2] For an excellent video summarizing what we have discussed so far, see http://thinkcomplex.com/pdvid1.

[3] And that introduces a whole new topic in game theory, the free-rider problem (see http://thinkcomplex.com/rider).
